Secton Under the Microscope: Pricing, Performance & Security

Introduction

We stumbled upon Secton, an AI-as-a-service startup, while browsing through its developers’ open-source projects. Four hours later, we had dug up several security lapses (see When Security Is Not A Factor: Secton), but the platform’s marketing claims, pricing, and performance also raised some eyebrows.

What is Secton?

If you didn't read our previous article: Secton positions itself alongside OpenAI and Anthropic, advertising "Modular APIs," "Human-first UX," and "Security baked in," while pitching itself as a cost-friendly option for developers.

Pricing & Performance Concerns

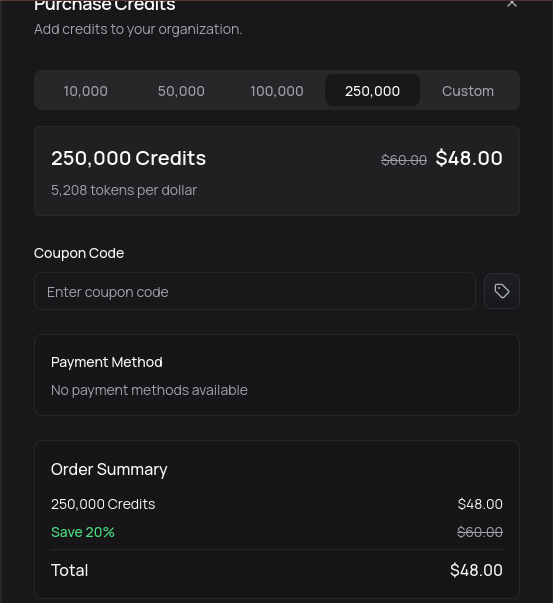

Beyond their security practices, my biggest concern is their pricing scheme and performance. They charge around 48 USD for 250,000 tokens from an AI model that generates about 10-25 tok/s and is noticeably slower and less capable than GPT-3.5 or GPT-4.
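To put that price on a per-million-token footing, here is some quick arithmetic using only the figures quoted above (48 USD, 250,000 tokens, 10-25 tok/s). The function names are my own, and it assumes the price applies to total tokens and that generation runs as a single steady stream, neither of which is confirmed.

```python
def usd_per_million(price_usd: float, tokens: float) -> float:
    """Scale a bundle price to a price per one million tokens."""
    return price_usd / tokens * 1_000_000


def hours_to_generate(tokens: float, tokens_per_second: float) -> float:
    """Wall-clock hours needed to stream `tokens` at a steady rate."""
    return tokens / tokens_per_second / 3600


BUNDLE_TOKENS = 250_000    # bundle size quoted above
BUNDLE_PRICE_USD = 48.0    # approximate price quoted above

per_million = usd_per_million(BUNDLE_PRICE_USD, BUNDLE_TOKENS)
slowest = hours_to_generate(BUNDLE_TOKENS, 10)   # at 10 tok/s
fastest = hours_to_generate(BUNDLE_TOKENS, 25)   # at 25 tok/s

print(f"{per_million:.2f} USD per 1M tokens")
print(f"{fastest:.1f} to {slowest:.1f} hours of generation per bundle")
```

Under those assumptions the bundle works out to about 192 USD per million tokens, and a single stream would need roughly 2.8 to 6.9 hours to use it up.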

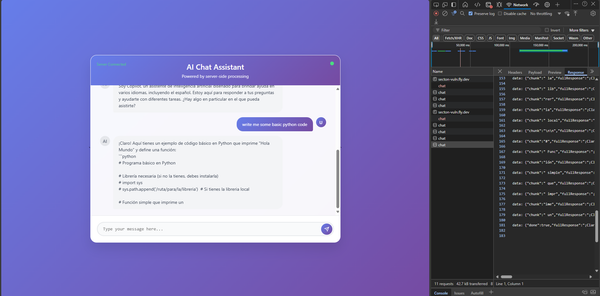

Below are the results of a quick test I put together. Each call hits the /chat/completions endpoint with the user message "Explain the fundamental concepts of relativity in detail." For Secton, we started with 50,000 credits and attempted 1,000 calls, of which 72 got through. Of the 928 that didn't make it, a large share were AI endpoint errors, 20 or so were rate-limit responses caused by running out of credits, and lastly there was a "FUNCTION_INVOCATION_TIMEOUT" error, which seems to be returned by Vercel, though I'm unsure.

All tests below used the same basic prompt and measured end-to-end latency.
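For anyone who wants to repeat a test like this, here is a minimal sketch of how such a harness can be structured. It is not the exact script behind these numbers: `CallResult`, `timed_call`, `summarize`, and the stand-in `fake_send` are hypothetical names, the `usage` dictionary shape is an assumption, and tokens per second is assumed to mean completion tokens divided by end-to-end latency, averaged per call.

```python
import time
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Optional


@dataclass
class CallResult:
    ok: bool
    completion_tokens: int = 0
    total_tokens: int = 0
    seconds: float = 0.0


def timed_call(send: Callable[[str], Optional[dict]], prompt: str) -> CallResult:
    """Time one request end to end; `send` returns a usage dict, or None/raises on failure."""
    start = time.perf_counter()
    try:
        usage = send(prompt)
    except Exception:
        usage = None
    elapsed = time.perf_counter() - start
    if not usage:
        return CallResult(ok=False, seconds=elapsed)
    return CallResult(True, usage["completion_tokens"], usage["total_tokens"], elapsed)


def summarize(results: list) -> dict:
    """Aggregate successful calls into the same fields as the summaries."""
    good = [r for r in results if r.ok]
    summary = {"successful": len(good), "attempted": len(results)}
    if not good:
        return summary
    summary["avg_completion_tok"] = mean(r.completion_tokens for r in good)
    summary["avg_total_tok"] = mean(r.total_tokens for r in good)
    # Guard against a zero elapsed time on very fast (e.g. mocked) calls.
    summary["avg_tok_per_sec"] = mean(
        r.completion_tokens / max(r.seconds, 1e-9) for r in good
    )
    summary["total_tokens"] = sum(r.total_tokens for r in good)
    return summary


def fake_send(prompt: str) -> dict:
    """Stand-in for a real HTTP call so the sketch runs offline."""
    time.sleep(0.01)
    return {"completion_tokens": 20, "total_tokens": 30}


PROMPT = "Explain the fundamental concepts of relativity in detail."
print(summarize([timed_call(fake_send, PROMPT) for _ in range(5)]))
```

To run it against a real API, swap `fake_send` for a function that performs the request and returns the provider's reported token usage.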

=== Summary (secton/copilot-zero) ===

Successful calls : 72 / 1000

Avg completion tok: 682.29

Avg total tok : 693.68

Avg tokens / sec : 22.13

Total tokens aprx : 49944.96

Cost aprx : $13.00

=== Summary (openai/o1-mini) ===

Successful calls : 100 / 100

Avg completion tok: 2555.82

Avg total tok : 2572.82

Avg tokens / sec : 164.16

Total tokens : 259656

Cost : $3.10

=== Summary (anthropic/claude-sonnet-4-20250514) ===

Successful calls : 54 / 100 (50/rpm rate-limit)

Avg completion tok: 1310.37

Avg total tok : 1328.37

Avg tokens / sec : 45.71

Total tokens : 73293

Cost : $1.09

On top of that, Secton's 48 USD figure is the current discounted price (assuming it was ever actually higher); in theory, the full price is 60 USD for that amount. Furthermore, it's unclear how they charge you: is it similar to OpenAI, with different costs for input and output, or does the price refer to the total tokens you receive?

Worse, the pricing is, for the most part, higher than what OpenAI charges for what can be considered some of the best models in the world: o1 and o3. Even compared with Anthropic's API costs, which are considered higher than the norm, Secton is still more expensive than Claude Opus 4 and Claude Sonnet 4!

At a glance, it feels significantly overpriced for its throughput: a slower, weaker model at a near-OpenAI level of cost.
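The test runs can be put on the same footing by dividing each reported cost by its reported total tokens. The sketch below does only that, with the cost and token figures copied from the three summaries; treat the results as rough blended rates, since input and output tokens are lumped together and the Secton totals are approximate.

```python
# (label, approximate cost in USD, total tokens), copied from the summaries
RUNS = [
    ("secton/copilot-zero", 13.00, 49_944.96),
    ("openai/o1-mini", 3.10, 259_656),
    ("anthropic/claude-sonnet-4-20250514", 1.09, 73_293),
]


def usd_per_million(cost_usd: float, tokens: float) -> float:
    """Blended cost per one million tokens for a whole test run."""
    return cost_usd / tokens * 1_000_000


rates = {name: usd_per_million(cost, tokens) for name, cost, tokens in RUNS}

for name, rate in rates.items():
    print(f"{name:<36} {rate:8.2f} USD per 1M tokens")

secton = rates["secton/copilot-zero"]
print(f"Secton vs o1-mini: {secton / rates['openai/o1-mini']:.1f}x")
```

On these numbers the Secton run comes out at roughly 260 USD per million tokens, against about 12 for o1-mini and about 15 for Claude Sonnet 4.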

Uptime & Reliability Concerns

Beyond the price, what gets me is the reliability of their API. About half the time it fails at random with no clear indication why; thankfully, failed calls aren't charged to my organization. It is still annoying to work with a tool that can't maintain its uptime, where one person calling the API somewhere between 100-200ish and 1k times is able to prevent every other customer from interacting with the model.
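Since the failures come with no clear indicator, one way to at least put numbers on them is to bucket every failed response on the client side. This is a minimal sketch: the bucket names are mine, treating HTTP 429 as the rate-limit signal and 5xx as an endpoint error is an assumption about how the API responds, and the sample failures are made up for illustration rather than taken from real logs.

```python
from collections import Counter


def classify_failure(status: int, body: str) -> str:
    """Bucket one failed response. Status codes and substrings are assumptions."""
    if "FUNCTION_INVOCATION_TIMEOUT" in body:
        return "platform_timeout"   # the error string mentioned in this post
    if status == 429:
        return "rate_limited"       # standard HTTP "Too Many Requests"
    if 500 <= status < 600:
        return "endpoint_error"
    return "other"


def tally(failures) -> Counter:
    """Count failures per bucket from (status, body) pairs."""
    return Counter(classify_failure(status, body) for status, body in failures)


# Made-up sample data, only to show the shape of the output.
sample = [
    (500, "internal error"),
    (500, "internal error"),
    (429, "out of credits"),
    (504, "FUNCTION_INVOCATION_TIMEOUT"),
]
print(tally(sample))
```

A breakdown like this makes it easier to tell provider-side errors apart from limits you hit yourself.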

AI-Generated Code & The Security Culture At Secton

As more companies use large language models to build new software, the generated code can itself introduce widespread security issues. Many sections of Secton’s API and UI felt like they had been generated by an LLM without thorough developer review: a noticeable lack of testing, minimal QA for code vulnerabilities, and what feels like uninformed implementation, with rate-limit logic done incorrectly or only half-done.

This points either to a lack of developer involvement, with the model blindly trusted to do the work, or to developers with limited secure-coding experience.

Observations like these are hard to reconcile with the ‘Security baked in’ claim; the platform seemed to treat security as an afterthought.

Now, do you remember the bug on their https://copilot.secton.org/api/chat endpoint? No? Well, it pretty much provided free access to their LLM, named Copilot. The endpoint was supposed to have guest limiting, but they just didn't implement it. Later, when discussing this with the developers, we learned that the bug was known and simply not fixed until we brought it up. Their argument for why it wasn't fixed was that:

"our primary focus has been the platform and an upcoming product"

I'd like to think (or hope) that this was a poorly made-up excuse, given that security should be your first goal, rather than pushing out AI-generated or horribly written products.
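For a sense of how little code basic guest limiting needs, here is a minimal fixed-window limiter sketch. It is not Secton's code: `GuestLimiter`, the guest identifier, and the limit and window values are all hypothetical, and a real deployment would need shared storage rather than an in-process dictionary.

```python
import time


class GuestLimiter:
    """Fixed-window limiter: at most `limit` requests per guest per `window` seconds."""

    def __init__(self, limit: int, window: float, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock      # injectable so the limiter can be tested without sleeping
        self._windows = {}      # guest_id -> (window_start, request_count)

    def allow(self, guest_id: str) -> bool:
        now = self.clock()
        start, count = self._windows.get(guest_id, (now, 0))
        if now - start >= self.window:
            start, count = now, 0        # window expired, start a fresh one
        if count >= self.limit:
            self._windows[guest_id] = (start, count)
            return False                 # over the limit: reject the request
        self._windows[guest_id] = (start, count + 1)
        return True


limiter = GuestLimiter(limit=3, window=60.0)
print([limiter.allow("guest-1") for _ in range(5)])
```

The point of the sketch is that the check has to run on every guest request before any model call is made; a limiter that exists but is never called protects nothing.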

My Gripe With the Developers

While all their bugs are now patched and the fixes are live in production, they could at least work on ensuring their authentication and authorization are built well. All of these issues would have been avoided if they had just done auth correctly. For a company that appears to make money by providing AI to developers, it's a really interesting practice to knowingly leave routes unauthenticated and just shrug it off. Their core purpose is to provide AI models to customers at a cost, yet customers who knew about these bugs could simply make use of them instead, never report them, and have almost nothing to worry about.
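To illustrate what "doing auth correctly" means at its most basic, here is a deny-by-default check in front of a compute route. Everything in it is hypothetical: the `Bearer <key_id>.<secret>` token format, the in-memory key store, and the `handle_compute` handler are assumptions for the sketch and say nothing about how Secton's API is actually built.

```python
import hashlib
import hmac

# Hypothetical key store: key id -> SHA-256 hex digest of the secret.
API_KEY_HASHES = {"org_demo": hashlib.sha256(b"demo-secret").hexdigest()}


def is_authorized(headers: dict) -> bool:
    """Deny by default; accept only 'Authorization: Bearer <key_id>.<secret>'."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    if scheme != "Bearer" or "." not in token:
        return False
    key_id, _, secret = token.partition(".")
    expected = API_KEY_HASHES.get(key_id)
    if expected is None:
        return False
    presented = hashlib.sha256(secret.encode()).hexdigest()
    # Constant-time comparison avoids leaking how much of the digest matched.
    return hmac.compare_digest(presented, expected)


def handle_compute(headers: dict, prompt: str):
    """Hypothetical route handler: the auth check runs before any model work."""
    if not is_authorized(headers):
        return 401, "unauthorized"
    return 200, f"(model output for: {prompt})"


print(handle_compute({}, "hello"))
print(handle_compute({"Authorization": "Bearer org_demo.demo-secret"}, "hello"))
```

The design choice that matters is the order: every route that spends compute rejects the request first and only then does the expensive work.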

"funnily enough, I was going to add auth to ai compute before anybody exploited it directly"

Credits

- noClaps, for locating the initial bug in Secton Copilot; you can find him at zerolimits.dev

- RollViral, for assisting in testing and locating bugs

Full disclosure: All specific findings were responsibly reported and, according to Secton, patched as of June 24th, 2025. See the companion post "When Security Is Not A Factor: Secton" for details.